library(rethinking)

library(cmdstanr)

library(brms)

# por si uso splines

library(mgcv)

# uso cmdstan como backend para stan desde R en vez de rstan

set_cmdstan_path("/home/jose/cmdstan")

set_ulam_cmdstan(TRUE)Una regresión de poisson, plagiando a Carlos

Me llamó la atención ayer el excelente post de Carlos sobre regresión de poisson casi trivival con numpyro y le dije que iba a ver si podía replicarlo usando brms u otra cosa que se comunique con stan. También estuve bicheando su código que tiene colgado aquí

Lo primero, es que se trata de un ejercicio relativamente común, que es la de estimar el punto en que una serie de datos cambia de tendencia. Existen cosas como prophet (que por debajo es Stan) o librerías como mcp cmp o changepoint en R específicas para este tipo de cosas. Pero a Carlos le gusta , con buen criterio, especificar directamente el modelo.

He de reconocer que me ha gustado la sintaxis de numpyro

Bueno vamos al lío.

Datos y librerías

Voy implementar los dos primeros modelos que hay en el notebook de Carlos usando la función ulam de la librería rethinking de Richard McElreath. Dicha librería es un interfaz, incompleto aunque didáctico, de hacer modelos bayesianos con Stan.

El último modelo, el que detecta el punto de cambio no he sido capaz de hacerlo con esta librería, pero se podría hacer usando stan directamente, como aquí.

Los datos, aunque él ha puesto la variable del tiempo de 1 a 23, yo la voy a poner de 1999 a 2021, porque intuía a qué datos se refería y que los he buscado.

d <- list(

y = c(54, 63, 50, 54, 71, 72, 57, 69, 71,

76, 57, 73, 62, 51, 54, 55, 60, 49,

50, 53, 56, 49, 48),

t = 1999:2021

)Modelo lambda constante

m0 <- ulam(

alist(y ~ poisson(lambda),

lambda <- a ,

a ~ normal(60, 5)

) ,

data = d,

chains = 2,

cores = 2 ,

sample = TRUE,

iter = 3000

)

#> Running MCMC with 2 parallel chains, with 1 thread(s) per chain...

#>

#> Chain 1 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 1 Iteration: 100 / 3000 [ 3%] (Warmup)

#> Chain 1 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 1 Iteration: 300 / 3000 [ 10%] (Warmup)

#> Chain 1 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 1 Iteration: 500 / 3000 [ 16%] (Warmup)

#> Chain 1 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 1 Iteration: 700 / 3000 [ 23%] (Warmup)

#> Chain 1 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 1 Iteration: 900 / 3000 [ 30%] (Warmup)

#> Chain 1 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 1 Iteration: 1100 / 3000 [ 36%] (Warmup)

#> Chain 1 Iteration: 1200 / 3000 [ 40%] (Warmup)

#> Chain 1 Iteration: 1300 / 3000 [ 43%] (Warmup)

#> Chain 1 Iteration: 1400 / 3000 [ 46%] (Warmup)

#> Chain 1 Iteration: 1500 / 3000 [ 50%] (Warmup)

#> Chain 1 Iteration: 1501 / 3000 [ 50%] (Sampling)

#> Chain 1 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 1 Iteration: 1700 / 3000 [ 56%] (Sampling)

#> Chain 1 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 1 Iteration: 1900 / 3000 [ 63%] (Sampling)

#> Chain 1 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 1 Iteration: 2100 / 3000 [ 70%] (Sampling)

#> Chain 1 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 1 Iteration: 2300 / 3000 [ 76%] (Sampling)

#> Chain 1 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 1 Iteration: 2500 / 3000 [ 83%] (Sampling)

#> Chain 1 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 1 Iteration: 2700 / 3000 [ 90%] (Sampling)

#> Chain 1 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 1 Iteration: 2900 / 3000 [ 96%] (Sampling)

#> Chain 1 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 2 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 3000 [ 3%] (Warmup)

#> Chain 2 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 2 Iteration: 300 / 3000 [ 10%] (Warmup)

#> Chain 2 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 2 Iteration: 500 / 3000 [ 16%] (Warmup)

#> Chain 2 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 2 Iteration: 700 / 3000 [ 23%] (Warmup)

#> Chain 2 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 2 Iteration: 900 / 3000 [ 30%] (Warmup)

#> Chain 2 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 2 Iteration: 1100 / 3000 [ 36%] (Warmup)

#> Chain 2 Iteration: 1200 / 3000 [ 40%] (Warmup)

#> Chain 2 Iteration: 1300 / 3000 [ 43%] (Warmup)

#> Chain 2 Iteration: 1400 / 3000 [ 46%] (Warmup)

#> Chain 2 Iteration: 1500 / 3000 [ 50%] (Warmup)

#> Chain 2 Iteration: 1501 / 3000 [ 50%] (Sampling)

#> Chain 2 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 2 Iteration: 1700 / 3000 [ 56%] (Sampling)

#> Chain 2 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 2 Iteration: 1900 / 3000 [ 63%] (Sampling)

#> Chain 2 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 2 Iteration: 2100 / 3000 [ 70%] (Sampling)

#> Chain 2 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 2 Iteration: 2300 / 3000 [ 76%] (Sampling)

#> Chain 2 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 2 Iteration: 2500 / 3000 [ 83%] (Sampling)

#> Chain 2 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 2 Iteration: 2700 / 3000 [ 90%] (Sampling)

#> Chain 2 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 2 Iteration: 2900 / 3000 [ 96%] (Sampling)

#> Chain 2 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 1 finished in 0.0 seconds.

#> Chain 2 finished in 0.0 seconds.

#>

#> Both chains finished successfully.

#> Mean chain execution time: 0.0 seconds.

#> Total execution time: 0.4 seconds.

precis(m0)

#> result

#> mean 58.976852

#> sd 1.542638

#> 5.5% 56.450528

#> 94.5% 61.456426

#> n_eff 1004.327368

#> Rhat 1.000203Modelo dónde lambda es una recta

m1 <- ulam(

alist(

y ~ poisson(lambda),

lambda <- a + b * t ,

a ~ normal(60, 5),

b ~ normal(0, 1)

) ,

data = d,

chains = 2,

cores = 2 ,

sample = TRUE,

iter = 3000

)

#> Running MCMC with 2 parallel chains, with 1 thread(s) per chain...

#>

#> Chain 1 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 1 Iteration: 100 / 3000 [ 3%] (Warmup)

#> Chain 1 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 1 Iteration: 300 / 3000 [ 10%] (Warmup)

#> Chain 1 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 1 Iteration: 500 / 3000 [ 16%] (Warmup)

#> Chain 2 Iteration: 1 / 3000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 3000 [ 3%] (Warmup)

#> Chain 2 Iteration: 200 / 3000 [ 6%] (Warmup)

#> Chain 2 Iteration: 300 / 3000 [ 10%] (Warmup)

#> Chain 2 Iteration: 400 / 3000 [ 13%] (Warmup)

#> Chain 2 Iteration: 500 / 3000 [ 16%] (Warmup)

#> Chain 2 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 1 Iteration: 600 / 3000 [ 20%] (Warmup)

#> Chain 1 Iteration: 700 / 3000 [ 23%] (Warmup)

#> Chain 1 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 1 Iteration: 900 / 3000 [ 30%] (Warmup)

#> Chain 1 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 1 Iteration: 1100 / 3000 [ 36%] (Warmup)

#> Chain 1 Iteration: 1200 / 3000 [ 40%] (Warmup)

#> Chain 1 Iteration: 1300 / 3000 [ 43%] (Warmup)

#> Chain 1 Iteration: 1400 / 3000 [ 46%] (Warmup)

#> Chain 1 Iteration: 1500 / 3000 [ 50%] (Warmup)

#> Chain 1 Iteration: 1501 / 3000 [ 50%] (Sampling)

#> Chain 1 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 1 Iteration: 1700 / 3000 [ 56%] (Sampling)

#> Chain 1 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 1 Iteration: 1900 / 3000 [ 63%] (Sampling)

#> Chain 1 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 1 Iteration: 2100 / 3000 [ 70%] (Sampling)

#> Chain 1 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 1 Iteration: 2300 / 3000 [ 76%] (Sampling)

#> Chain 1 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 1 Iteration: 2500 / 3000 [ 83%] (Sampling)

#> Chain 1 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 1 Iteration: 2700 / 3000 [ 90%] (Sampling)

#> Chain 1 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 1 Iteration: 2900 / 3000 [ 96%] (Sampling)

#> Chain 1 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 2 Iteration: 700 / 3000 [ 23%] (Warmup)

#> Chain 2 Iteration: 800 / 3000 [ 26%] (Warmup)

#> Chain 2 Iteration: 900 / 3000 [ 30%] (Warmup)

#> Chain 2 Iteration: 1000 / 3000 [ 33%] (Warmup)

#> Chain 2 Iteration: 1100 / 3000 [ 36%] (Warmup)

#> Chain 2 Iteration: 1200 / 3000 [ 40%] (Warmup)

#> Chain 2 Iteration: 1300 / 3000 [ 43%] (Warmup)

#> Chain 2 Iteration: 1400 / 3000 [ 46%] (Warmup)

#> Chain 2 Iteration: 1500 / 3000 [ 50%] (Warmup)

#> Chain 2 Iteration: 1501 / 3000 [ 50%] (Sampling)

#> Chain 2 Iteration: 1600 / 3000 [ 53%] (Sampling)

#> Chain 2 Iteration: 1700 / 3000 [ 56%] (Sampling)

#> Chain 2 Iteration: 1800 / 3000 [ 60%] (Sampling)

#> Chain 2 Iteration: 1900 / 3000 [ 63%] (Sampling)

#> Chain 2 Iteration: 2000 / 3000 [ 66%] (Sampling)

#> Chain 2 Iteration: 2100 / 3000 [ 70%] (Sampling)

#> Chain 2 Iteration: 2200 / 3000 [ 73%] (Sampling)

#> Chain 2 Iteration: 2300 / 3000 [ 76%] (Sampling)

#> Chain 2 Iteration: 2400 / 3000 [ 80%] (Sampling)

#> Chain 2 Iteration: 2500 / 3000 [ 83%] (Sampling)

#> Chain 2 Iteration: 2600 / 3000 [ 86%] (Sampling)

#> Chain 2 Iteration: 2700 / 3000 [ 90%] (Sampling)

#> Chain 2 Iteration: 2800 / 3000 [ 93%] (Sampling)

#> Chain 2 Iteration: 2900 / 3000 [ 96%] (Sampling)

#> Chain 2 Iteration: 3000 / 3000 [100%] (Sampling)

#> Chain 1 finished in 0.2 seconds.

#> Chain 2 finished in 0.2 seconds.

#>

#> Both chains finished successfully.

#> Mean chain execution time: 0.2 seconds.

#> Total execution time: 0.3 seconds.

precis(m1)

#> mean sd 5.5% 94.5% n_eff Rhat4

#> a 60.2808693333 5.071538760 52.390828500 68.641990000 454.4379 1.005453

#> b -0.0007081383 0.002636486 -0.005088067 0.003417291 452.2575 1.005254Modelo para detectar el punto de cambio.

La verdad que me habría gustado seguir usando ulam para el modelo pero he sido incapaz de replicar este cacho de código de numpyro

def model02(t, datos):

knot = numpyro.sample("knot", dist.Normal(len(t)/2, len(t)/4))

a0 = numpyro.sample("a0", dist.Normal(60, 5))

b0 = numpyro.sample("b0", dist.Normal( 0, 1))

b1 = numpyro.sample("b1", dist.Normal(0, 1))

λ = a0 + t * b0 + jnp.where(t > knot, (t - knot) * b1, 0)

with numpyro.plate("data", len(t)):

numpyro.sample("obs", dist.Poisson(λ), obs=datos)

El problema reside en jnp.where(t > knot, (t - knot) * b1, 0) , para resolverlo habría que programar directamente en stan o que ulam pudiera usar la función step de Stan, que si x es menor que 0 vale 0 y 1 en otro caso. El código en ulam si funcionara sería así

m2 <- ulam(

alist(

y ~ poisson(lambda),

lambda <- b0 + b1 * t + b2 * (t - knot) * step(t - knot) ,

knot ~ normal(23/2, 23/4),

b0 ~ normal(60, 5),

b1 ~ normal(0, 1),

b2 ~ normal(0, 1),

) ,

data=d, chains=2, cores=1 , sample=TRUE,

iter = 3000)pero no funciona , así que vamos a ver como sería usando brms. brms tiene una sintaxis digamos que peculiar, y su objetivo es parecerse a la especificación que se hace en R de los modelos lineales con lm y la que se hace con lme4. Es decir sería algo similar a esto

brm( y ~ x1 + x2 + (1 | var_efecto_aleatorio) ,

family = poisson("identity"),

data = df)dónde no se especifican los \(\beta_i\) de forma explícita. Pero también tiene sintaxis para hacerlo explícito. Veamos.

# Datos en dataframe

df <- data.frame(y = d$y, t = d$t)bform <- bf(

y ~ b0 + b1 * t +

# brms si acepta la función step de Stan

# cuando t-change >= 0 entonces step(t-change) = 1, es decir, cuanto t > change y 0 en otro caso

b2 * (t-change) * step( t - change),

# Hay que poner que estime el "intercept" de los parámetros y el nl = TRUE para que brms sepa que son parámetros

# y no variables en los datos

b0 ~ 1,

b1 ~ 1,

b2 ~ 1,

change ~ 1,

nl = TRUE

)Especificamos las priors. En brms se escriben las priors y se “concatenan” mediante +

bprior <- prior(normal(60, 5), nlpar = "b0") +

prior(normal(0, 1), nlpar = "b1") +

prior(normal(0, 1), nlpar = "b2") +

# para el cambio ponemos como media la mitad del intervalo y unos 5 años de desviación típica

prior(normal( 2010, 23/4), nlpar = "change")Y ya podemos escribir el modelo en brms que lo compilara a stan y hace el mcmc

mbrm <-

brm(

bform,

family = poisson("identity"), # pongo de link la identidad en vez del log por hacer como en el post original

prior = bprior,

data = df,

backend = "cmdstanr",

cores = 6,

chains = 6,

iter = 4000,

warmup = 500

)

#> Running MCMC with 6 parallel chains...

#>

#> Chain 1 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 1 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 1 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 2 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 2 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 3 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 4 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 5 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 6 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 3 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 3 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 5 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 3 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 4 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 4 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 5 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 1 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 6 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 6 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 2 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 3 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 5 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 1 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 2 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 3 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 3 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 6 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 5 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 2 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 2 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 2 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 4 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 1 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 1 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 2 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 2 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 2 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 2 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 5 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 5 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 5 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 5 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 5 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 1 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 1 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 2 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 2 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 2 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 3 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 5 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 5 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 5 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 5 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 1 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 1 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 2 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 2 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 2 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 2 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 5 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 5 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 5 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 5 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 1 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 1 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 1 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 2 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 2 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 2 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 2 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 5 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 5 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 5 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 5 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 6 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 1 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 1 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 2 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 2 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 2 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 5 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 5 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 5 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 5 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 1 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 1 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 2 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 2 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 2 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 5 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 5 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 5 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 5 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 1 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 1 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 1 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 2 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 2 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 2 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 5 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 5 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 5 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 5 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 1 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 1 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 1 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 2 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 2 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 2 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 2 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 5 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 5 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 5 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 6 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 6 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 6 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 1 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 1 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 1 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 2 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 2 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 2 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 3 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 4 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 5 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 5 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 5 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 5 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 6 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 6 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 6 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 1 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 1 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 1 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 2 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 2 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 2 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 5 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 6 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 6 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 6 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 2 finished in 2.5 seconds.

#> Chain 5 finished in 2.3 seconds.

#> Chain 1 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 1 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 1 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 1 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 6 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 6 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 6 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 6 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 6 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 1 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 1 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 1 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 4 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 4 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 6 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 6 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 6 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 6 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 1 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 1 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 1 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 1 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 4 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 4 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 6 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 6 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 6 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 6 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 6 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 1 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 4 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 4 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 6 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 6 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 6 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 6 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 6 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 1 finished in 2.9 seconds.

#> Chain 3 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 4 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 4 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 6 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 6 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 6 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 6 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 6 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 4 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 4 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 6 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 6 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 6 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 6 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 6 finished in 3.0 seconds.

#> Chain 4 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 4 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 4 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 4 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 4 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 3 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 4 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 4 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 4 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 4 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 4 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 4 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 4 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 4 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 4 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 3 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 4 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 4 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 4 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 4 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 4 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 4 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 4 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 4 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 4 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 4 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 4 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 4 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 4 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 4 finished in 4.4 seconds.

#> Chain 3 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 3 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 3 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 3 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 3 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 3 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 3 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 3 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 3 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 3 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 3 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 3 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 3 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 3 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 3 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 3 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 3 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 3 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 3 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 3 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 3 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 3 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 3 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 3 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 3 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 3 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 3 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 3 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 3 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 3 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 3 finished in 18.0 seconds.

#>

#> All 6 chains finished successfully.

#> Mean chain execution time: 5.5 seconds.

#> Total execution time: 18.1 seconds.Y bueno, no ha tardado mucho, unos 3 segundos por cadena.

summary(mbrm)

#> Family: poisson

#> Links: mu = identity

#> Formula: y ~ b0 + b1 * t + b2 * (t - change) * step(t - change)

#> b0 ~ 1

#> b1 ~ 1

#> b2 ~ 1

#> change ~ 1

#> Data: df (Number of observations: 23)

#> Draws: 6 chains, each with iter = 4000; warmup = 500; thin = 1;

#> total post-warmup draws = 21000

#>

#> Population-Level Effects:

#> Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

#> b0_Intercept 50.33 22.21 1.59 69.57 1.40 13 10

#> b1_Intercept 0.01 0.01 -0.00 0.03 1.37 13 23

#> b2_Intercept -1.07 0.48 -1.93 -0.32 1.00 8488 8579

#> change_Intercept 2008.67 3.31 2002.91 2014.20 1.00 9493 7851

#>

#> Draws were sampled using sample(hmc). For each parameter, Bulk_ESS

#> and Tail_ESS are effective sample size measures, and Rhat is the potential

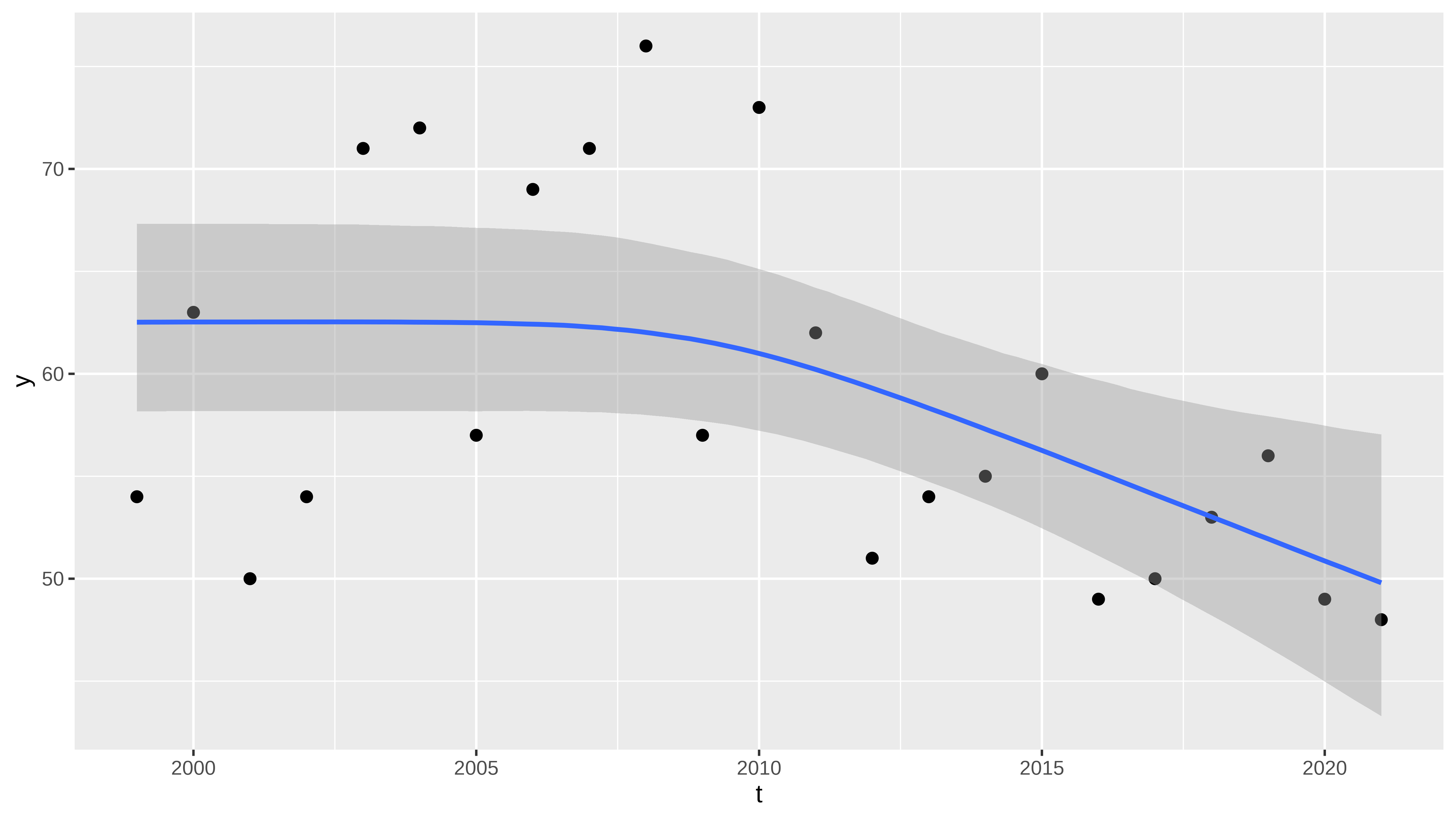

#> scale reduction factor on split chains (at convergence, Rhat = 1).Podemos pintar la curva media estimada y su intervalo de credibilidad

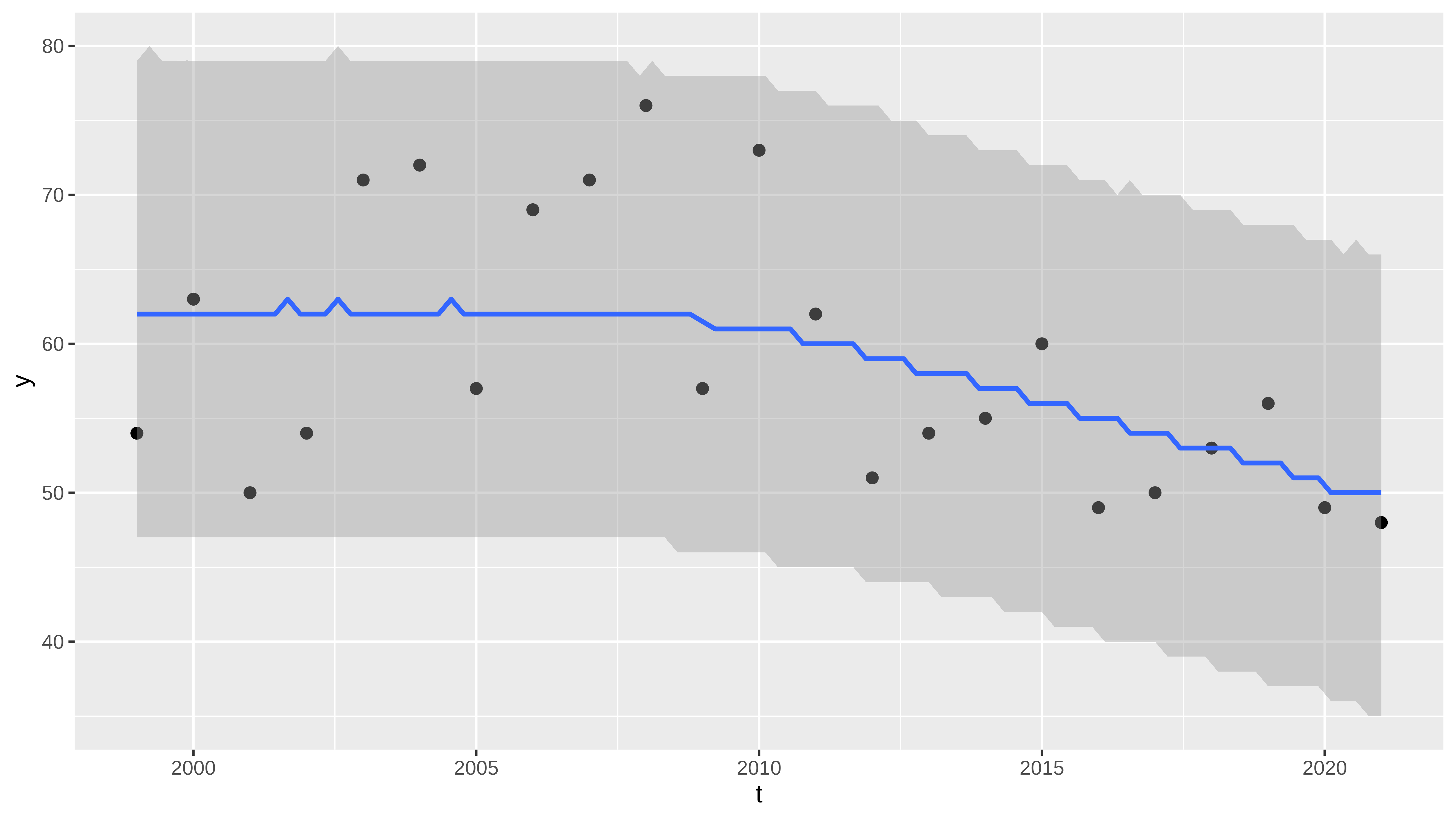

También la posterior predict function de los diferentes puntos. Evidentemente esto tiene más variabilidad que la posterior predict de la media condicionada que era el gráfico anterior.

Y básicamente, obtenermos los mismo resultados que Carlos con numpyro.

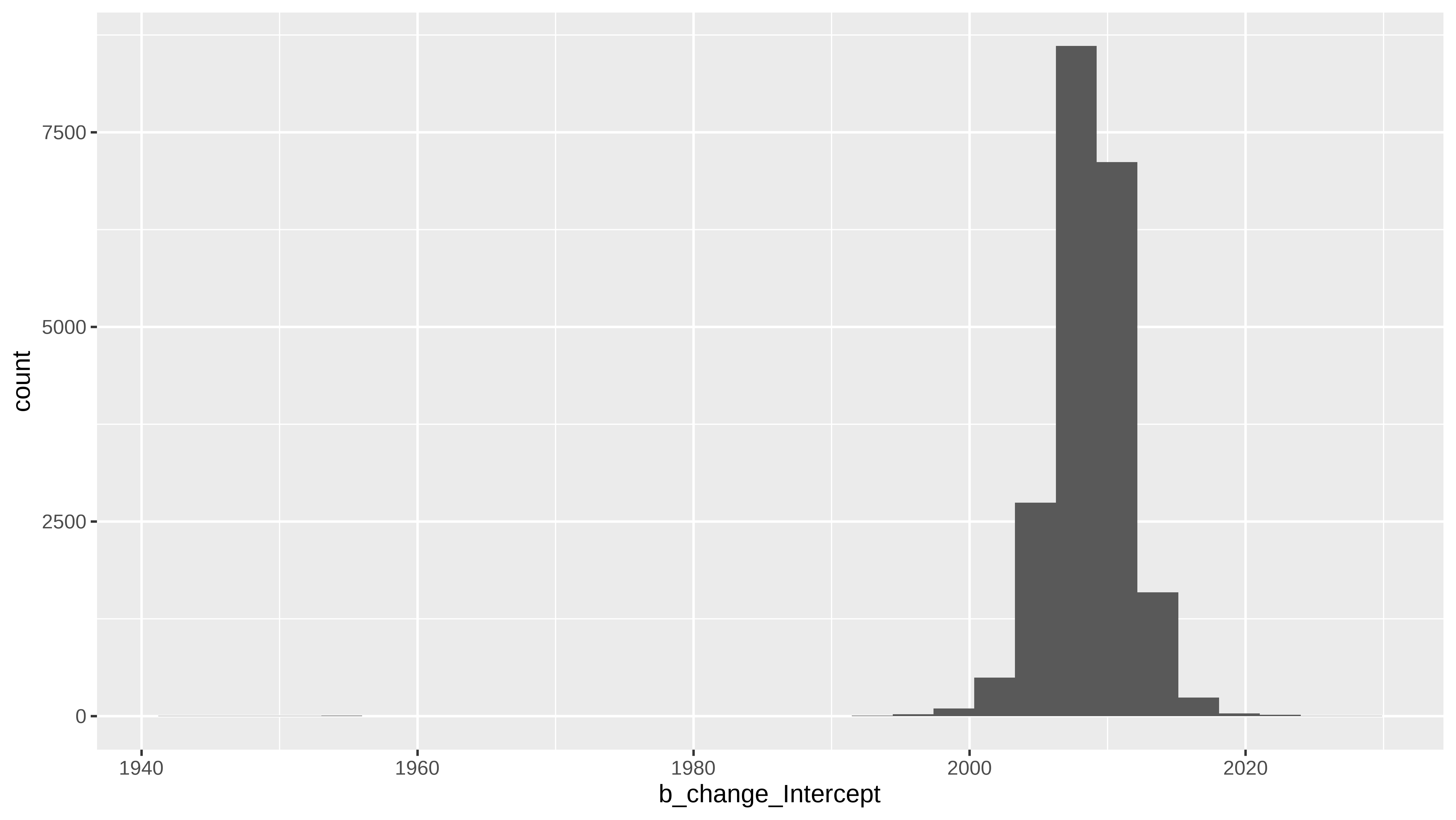

Podemos obtener un histograma de la posterior del punto de cambio

punto_cambio_posterior <- as_draws_df(mbrm, variable = "b_change_Intercept")

ggplot2::ggplot(punto_cambio_posterior, aes(x =b_change_Intercept )) +

ggplot2::geom_histogram()posterior <- as_draws_df(mbrm)

head(posterior)

#> # A draws_df: 6 iterations, 1 chains, and 6 variables

#> b_b0_Intercept b_b1_Intercept b_b2_Intercept b_change_Intercept lprior lp__

#> 1 52 0.00607 -1.33 2010 -9.0 -87

#> 2 69 -0.00512 -0.56 2006 -9.1 -89

#> 3 61 0.00087 -0.40 2006 -7.3 -87

#> 4 63 -0.00099 -0.48 2006 -7.5 -86

#> 5 59 0.00275 -1.17 2006 -7.9 -85

#> 6 58 0.00339 -1.18 2006 -8.1 -86

#> # ... hidden reserved variables {'.chain', '.iteration', '.draw'}

dim(posterior)

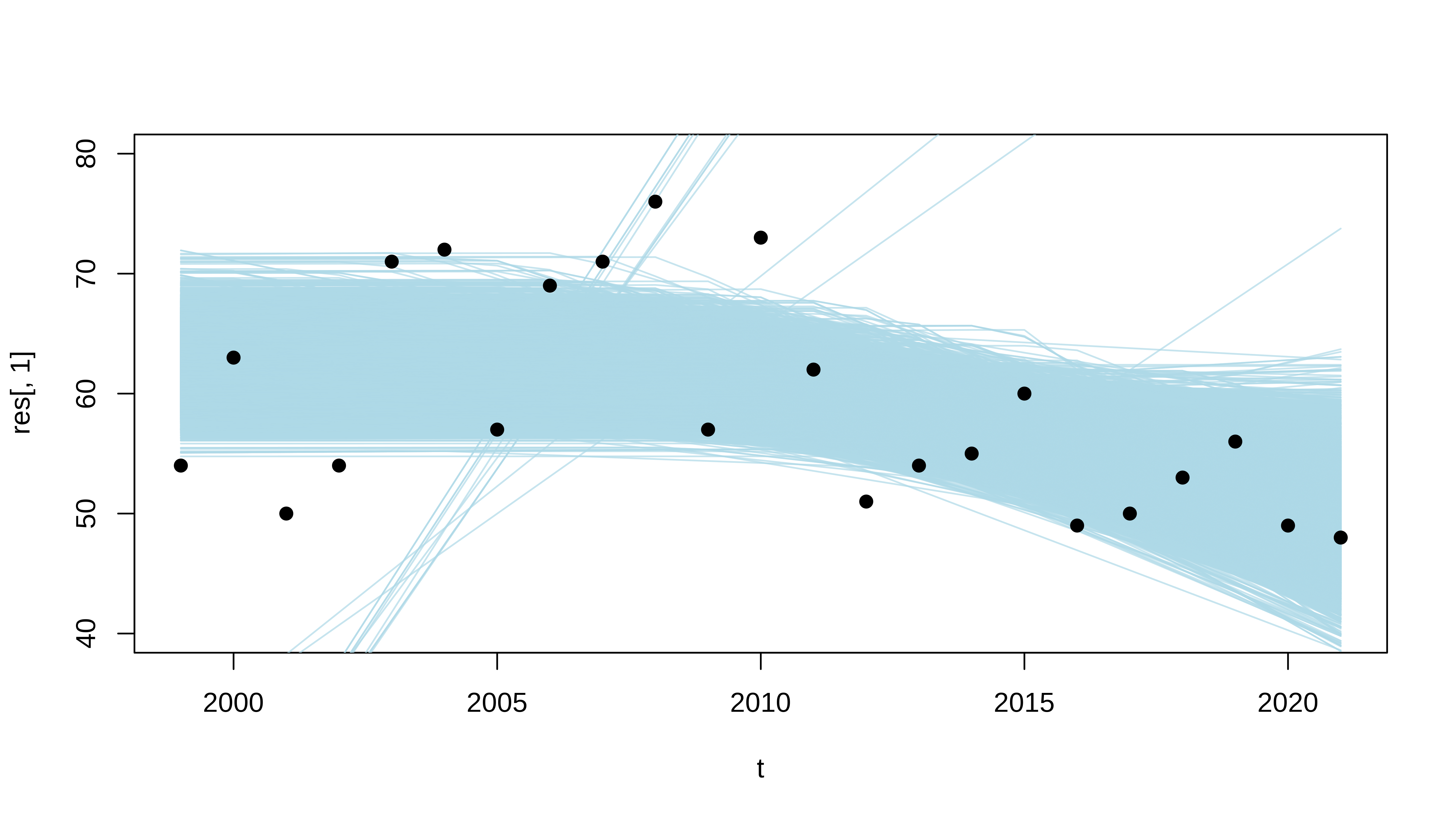

#> [1] 21000 9Pintando las posterior predict directamente

# Muy old R base, pero es que es la hora de comer y no tengo azúcar en el cerebro

n_samples <- 10000

idx_sample <- sample(1:nrow(posterior), size = n_samples, replace = TRUE)

posterior_df <- as.data.frame(posterior)

make_curve_functions <- function(fila) {

b0 <- posterior_df[fila, "b_b0_Intercept"]

b1 <- posterior_df[fila, "b_b1_Intercept"]

b2 <- posterior_df[fila, "b_b2_Intercept"]

change <- posterior_df[fila, "b_change_Intercept"]

lambda <- b0 + b1 * t + ifelse(t > change, (t-change) * b2, 0)

return(lambda)

}

t <- df$t

res <- sapply(idx_sample, make_curve_functions)

plot(t, res[,1], pch = 19, col = scales::alpha("lightblue", 0.7), ylim = c(40, 80), type = "l")

for (id in 2:n_samples) {

points(t, res[,id], pch = 19, col = scales::alpha("lightblue", 0.7), type = "l")

}

points(t, df$y, pch = 19)Una cosa interesante de brms es que nos construye código en Stan que luego nosotros podemos modificar

make_stancode(bform, data = df,prior= bprior, family = poisson("identity"))

#> // generated with brms 2.19.0

#> functions {

#> }

#> data {

#> int<lower=1> N; // total number of observations

#> int Y[N]; // response variable

#> int<lower=1> K_b0; // number of population-level effects

#> matrix[N, K_b0] X_b0; // population-level design matrix

#> int<lower=1> K_b1; // number of population-level effects

#> matrix[N, K_b1] X_b1; // population-level design matrix

#> int<lower=1> K_b2; // number of population-level effects

#> matrix[N, K_b2] X_b2; // population-level design matrix

#> int<lower=1> K_change; // number of population-level effects

#> matrix[N, K_change] X_change; // population-level design matrix

#> // covariate vectors for non-linear functions

#> int C_1[N];

#> int prior_only; // should the likelihood be ignored?

#> }

#> transformed data {

#> }

#> parameters {

#> vector[K_b0] b_b0; // population-level effects

#> vector[K_b1] b_b1; // population-level effects

#> vector[K_b2] b_b2; // population-level effects

#> vector[K_change] b_change; // population-level effects

#> }

#> transformed parameters {

#> real lprior = 0; // prior contributions to the log posterior

#> lprior += normal_lpdf(b_b0 | 60, 5);

#> lprior += normal_lpdf(b_b1 | 0, 1);

#> lprior += normal_lpdf(b_b2 | 0, 1);

#> lprior += normal_lpdf(b_change | 2010, 23/4);

#> }

#> model {

#> // likelihood including constants

#> if (!prior_only) {

#> // initialize linear predictor term

#> vector[N] nlp_b0 = rep_vector(0.0, N);

#> // initialize linear predictor term

#> vector[N] nlp_b1 = rep_vector(0.0, N);

#> // initialize linear predictor term

#> vector[N] nlp_b2 = rep_vector(0.0, N);

#> // initialize linear predictor term

#> vector[N] nlp_change = rep_vector(0.0, N);

#> // initialize non-linear predictor term

#> vector[N] mu;

#> nlp_b0 += X_b0 * b_b0;

#> nlp_b1 += X_b1 * b_b1;

#> nlp_b2 += X_b2 * b_b2;

#> nlp_change += X_change * b_change;

#> for (n in 1:N) {

#> // compute non-linear predictor values

#> mu[n] = (nlp_b0[n] + nlp_b1[n] * C_1[n] + nlp_b2[n] * (C_1[n] - nlp_change[n]) * step(C_1[n] - nlp_change[n]));

#> }

#> target += poisson_lpmf(Y | mu);

#> }

#> // priors including constants

#> target += lprior;

#> }

#> generated quantities {

#> }Notas

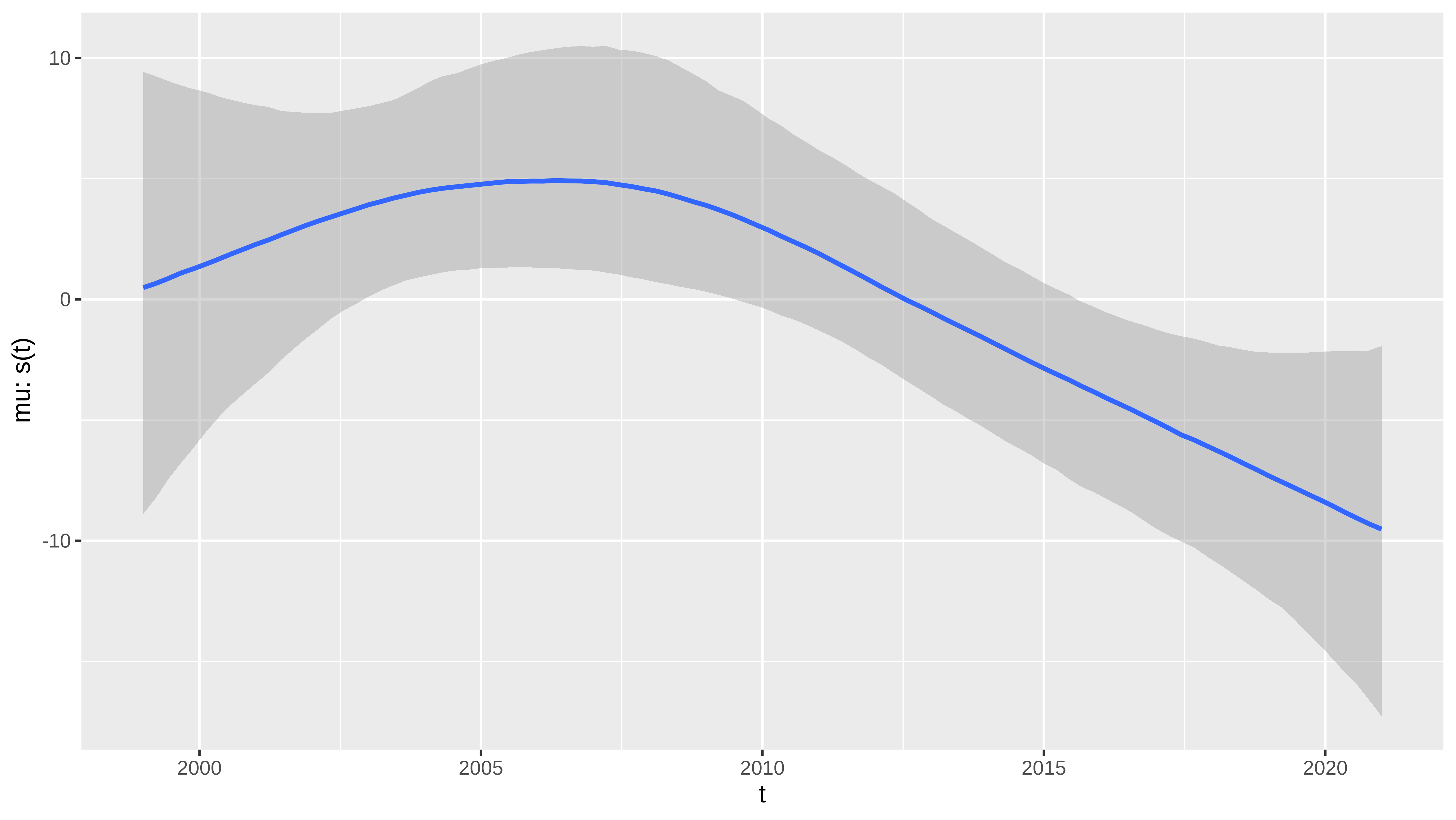

Una forma fácil de ajustar estos datos es usando splines.

m_spline <- brm(

y ~ s(t),

family = poisson("identity"),

data = df,

backend= "cmdstanr"

)

#> Running MCMC with 4 sequential chains...

#>

#> Chain 1 Iteration: 1 / 2000 [ 0%] (Warmup)

#> Chain 1 Iteration: 100 / 2000 [ 5%] (Warmup)

#> Chain 1 Iteration: 200 / 2000 [ 10%] (Warmup)

#> Chain 1 Iteration: 300 / 2000 [ 15%] (Warmup)

#> Chain 1 Iteration: 400 / 2000 [ 20%] (Warmup)

#> Chain 1 Iteration: 500 / 2000 [ 25%] (Warmup)

#> Chain 1 Iteration: 600 / 2000 [ 30%] (Warmup)

#> Chain 1 Iteration: 700 / 2000 [ 35%] (Warmup)

#> Chain 1 Iteration: 800 / 2000 [ 40%] (Warmup)

#> Chain 1 Iteration: 900 / 2000 [ 45%] (Warmup)

#> Chain 1 Iteration: 1000 / 2000 [ 50%] (Warmup)

#> Chain 1 Iteration: 1001 / 2000 [ 50%] (Sampling)

#> Chain 1 Iteration: 1100 / 2000 [ 55%] (Sampling)

#> Chain 1 Iteration: 1200 / 2000 [ 60%] (Sampling)

#> Chain 1 Iteration: 1300 / 2000 [ 65%] (Sampling)

#> Chain 1 Iteration: 1400 / 2000 [ 70%] (Sampling)

#> Chain 1 Iteration: 1500 / 2000 [ 75%] (Sampling)

#> Chain 1 Iteration: 1600 / 2000 [ 80%] (Sampling)

#> Chain 1 Iteration: 1700 / 2000 [ 85%] (Sampling)

#> Chain 1 Iteration: 1800 / 2000 [ 90%] (Sampling)

#> Chain 1 Iteration: 1900 / 2000 [ 95%] (Sampling)

#> Chain 1 Iteration: 2000 / 2000 [100%] (Sampling)

#> Chain 1 finished in 0.3 seconds.

#> Chain 2 Iteration: 1 / 2000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 2000 [ 5%] (Warmup)

#> Chain 2 Iteration: 200 / 2000 [ 10%] (Warmup)

#> Chain 2 Iteration: 300 / 2000 [ 15%] (Warmup)

#> Chain 2 Iteration: 400 / 2000 [ 20%] (Warmup)

#> Chain 2 Iteration: 500 / 2000 [ 25%] (Warmup)

#> Chain 2 Iteration: 600 / 2000 [ 30%] (Warmup)

#> Chain 2 Iteration: 700 / 2000 [ 35%] (Warmup)

#> Chain 2 Iteration: 800 / 2000 [ 40%] (Warmup)

#> Chain 2 Iteration: 900 / 2000 [ 45%] (Warmup)

#> Chain 2 Iteration: 1000 / 2000 [ 50%] (Warmup)

#> Chain 2 Iteration: 1001 / 2000 [ 50%] (Sampling)

#> Chain 2 Iteration: 1100 / 2000 [ 55%] (Sampling)

#> Chain 2 Iteration: 1200 / 2000 [ 60%] (Sampling)

#> Chain 2 Iteration: 1300 / 2000 [ 65%] (Sampling)

#> Chain 2 Iteration: 1400 / 2000 [ 70%] (Sampling)

#> Chain 2 Iteration: 1500 / 2000 [ 75%] (Sampling)

#> Chain 2 Iteration: 1600 / 2000 [ 80%] (Sampling)

#> Chain 2 Iteration: 1700 / 2000 [ 85%] (Sampling)

#> Chain 2 Iteration: 1800 / 2000 [ 90%] (Sampling)

#> Chain 2 Iteration: 1900 / 2000 [ 95%] (Sampling)

#> Chain 2 Iteration: 2000 / 2000 [100%] (Sampling)

#> Chain 2 finished in 0.4 seconds.

#> Chain 3 Iteration: 1 / 2000 [ 0%] (Warmup)

#> Chain 3 Iteration: 100 / 2000 [ 5%] (Warmup)

#> Chain 3 Iteration: 200 / 2000 [ 10%] (Warmup)

#> Chain 3 Iteration: 300 / 2000 [ 15%] (Warmup)

#> Chain 3 Iteration: 400 / 2000 [ 20%] (Warmup)

#> Chain 3 Iteration: 500 / 2000 [ 25%] (Warmup)

#> Chain 3 Iteration: 600 / 2000 [ 30%] (Warmup)

#> Chain 3 Iteration: 700 / 2000 [ 35%] (Warmup)

#> Chain 3 Iteration: 800 / 2000 [ 40%] (Warmup)

#> Chain 3 Iteration: 900 / 2000 [ 45%] (Warmup)

#> Chain 3 Iteration: 1000 / 2000 [ 50%] (Warmup)

#> Chain 3 Iteration: 1001 / 2000 [ 50%] (Sampling)

#> Chain 3 Iteration: 1100 / 2000 [ 55%] (Sampling)

#> Chain 3 Iteration: 1200 / 2000 [ 60%] (Sampling)

#> Chain 3 Iteration: 1300 / 2000 [ 65%] (Sampling)

#> Chain 3 Iteration: 1400 / 2000 [ 70%] (Sampling)

#> Chain 3 Iteration: 1500 / 2000 [ 75%] (Sampling)

#> Chain 3 Iteration: 1600 / 2000 [ 80%] (Sampling)

#> Chain 3 Iteration: 1700 / 2000 [ 85%] (Sampling)

#> Chain 3 Iteration: 1800 / 2000 [ 90%] (Sampling)

#> Chain 3 Iteration: 1900 / 2000 [ 95%] (Sampling)

#> Chain 3 Iteration: 2000 / 2000 [100%] (Sampling)

#> Chain 3 finished in 0.3 seconds.

#> Chain 4 Iteration: 1 / 2000 [ 0%] (Warmup)

#> Chain 4 Iteration: 100 / 2000 [ 5%] (Warmup)

#> Chain 4 Iteration: 200 / 2000 [ 10%] (Warmup)

#> Chain 4 Iteration: 300 / 2000 [ 15%] (Warmup)

#> Chain 4 Iteration: 400 / 2000 [ 20%] (Warmup)

#> Chain 4 Iteration: 500 / 2000 [ 25%] (Warmup)

#> Chain 4 Iteration: 600 / 2000 [ 30%] (Warmup)

#> Chain 4 Iteration: 700 / 2000 [ 35%] (Warmup)

#> Chain 4 Iteration: 800 / 2000 [ 40%] (Warmup)

#> Chain 4 Iteration: 900 / 2000 [ 45%] (Warmup)

#> Chain 4 Iteration: 1000 / 2000 [ 50%] (Warmup)

#> Chain 4 Iteration: 1001 / 2000 [ 50%] (Sampling)

#> Chain 4 Iteration: 1100 / 2000 [ 55%] (Sampling)

#> Chain 4 Iteration: 1200 / 2000 [ 60%] (Sampling)

#> Chain 4 Iteration: 1300 / 2000 [ 65%] (Sampling)

#> Chain 4 Iteration: 1400 / 2000 [ 70%] (Sampling)

#> Chain 4 Iteration: 1500 / 2000 [ 75%] (Sampling)

#> Chain 4 Iteration: 1600 / 2000 [ 80%] (Sampling)

#> Chain 4 Iteration: 1700 / 2000 [ 85%] (Sampling)

#> Chain 4 Iteration: 1800 / 2000 [ 90%] (Sampling)

#> Chain 4 Iteration: 1900 / 2000 [ 95%] (Sampling)

#> Chain 4 Iteration: 2000 / 2000 [100%] (Sampling)

#> Chain 4 finished in 0.3 seconds.

#>

#> All 4 chains finished successfully.

#> Mean chain execution time: 0.3 seconds.

#> Total execution time: 1.9 seconds.

summary(m_spline)

#> Family: poisson

#> Links: mu = identity

#> Formula: y ~ s(t)

#> Data: df (Number of observations: 23)

#> Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

#> total post-warmup draws = 4000

#>

#> Smooth Terms:

#> Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

#> sds(st_1) 12.78 7.77 1.41 30.74 1.00 1376 1528

#>

#> Population-Level Effects:

#> Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

#> Intercept 58.83 1.57 55.82 61.89 1.00 3590 2668

#> st_1 -3.76 31.71 -56.38 67.67 1.00 906 572

#>

#> Draws were sampled using sample(hmc). For each parameter, Bulk_ESS

#> and Tail_ESS are effective sample size measures, and Rhat is the potential

#> scale reduction factor on split chains (at convergence, Rhat = 1).

plot(conditional_smooths(m_spline, method = "posterior_predict")

) Y tendríamos la posterior del spline , pero la verdad, ahora no tengo ganas de buscar como interpretar ese spline para traducir a punto de cambio de tendencia.

Por otro lado, leyendo por ahí he visto una implementación un pelín diferente

bform_alt <- bf(

y ~ b0 +

# aqui es donde cambia un poco

b1 * (t - change) * step(change - t) +

# post cambio

b2 * (t - change) * step(t - change),

b0 + b1 + b2 + change ~ 1,

nl = TRUE

)

bprior <- prior(normal(60, 5), nlpar = "b0") +

prior(normal(0, 1), nlpar = "b1") +

prior(normal(0, 1), nlpar = "b2") +

prior(normal(2010, 23 / 4), nlpar = "change")

mbrm_alt <-

brm(

bform_alt, family = poisson("identity"),

prior = bprior,

data = df,

backend = "cmdstanr",

cores = 6,

chains = 6,

iter = 4000,

warmup = 500

)

#> Running MCMC with 6 parallel chains...

#>

#> Chain 1 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 1 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 2 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 2 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 3 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 4 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 5 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 6 Iteration: 1 / 4000 [ 0%] (Warmup)

#> Chain 1 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 2 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 3 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 3 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 4 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 5 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 5 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 6 Iteration: 100 / 4000 [ 2%] (Warmup)

#> Chain 6 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 4 Iteration: 200 / 4000 [ 5%] (Warmup)

#> Chain 1 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 1 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 1 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 1 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 5 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 1 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 1 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 1 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 1 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 1 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 1 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 1 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 3 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 1 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 1 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 1 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 1 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 1 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 1 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 1 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 2 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 3 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 5 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 6 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 1 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 1 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 1 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 1 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 1 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 1 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 3 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 3 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 3 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 3 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 5 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 5 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 5 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 5 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 6 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 1 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 1 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 1 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 1 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 1 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 1 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 1 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 3 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 3 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 3 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 3 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 3 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 4 Iteration: 300 / 4000 [ 7%] (Warmup)

#> Chain 4 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 5 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 5 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 5 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 5 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 6 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 6 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 6 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 6 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 6 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 6 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 1 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 1 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 1 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 1 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 1 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 1 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 1 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 3 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 3 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 3 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 3 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 3 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 3 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 4 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 4 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 4 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 4 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 4 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 4 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 5 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 5 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 5 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 5 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 5 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 6 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 6 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 6 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 6 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 6 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 6 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 1 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 1 finished in 1.4 seconds.

#> Chain 2 Iteration: 400 / 4000 [ 10%] (Warmup)

#> Chain 3 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 3 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 3 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 3 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 3 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 3 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 4 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 4 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 4 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 4 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 4 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 5 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 5 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 5 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 5 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 5 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 6 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 6 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 6 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 6 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 6 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 3 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 3 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 3 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 3 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 3 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 3 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 4 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 4 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 4 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 4 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 4 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 4 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 5 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 5 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 5 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 5 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 5 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 5 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 6 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 6 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 6 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 6 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 6 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 6 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 6 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 2 Iteration: 500 / 4000 [ 12%] (Warmup)

#> Chain 2 Iteration: 501 / 4000 [ 12%] (Sampling)

#> Chain 2 Iteration: 600 / 4000 [ 15%] (Sampling)

#> Chain 2 Iteration: 700 / 4000 [ 17%] (Sampling)

#> Chain 2 Iteration: 800 / 4000 [ 20%] (Sampling)

#> Chain 2 Iteration: 900 / 4000 [ 22%] (Sampling)

#> Chain 2 Iteration: 1000 / 4000 [ 25%] (Sampling)

#> Chain 2 Iteration: 1100 / 4000 [ 27%] (Sampling)

#> Chain 3 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 3 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 3 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 3 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 3 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 3 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 4 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 4 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 4 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 4 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 4 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 4 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 5 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 5 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 5 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 5 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 5 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 6 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 6 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 6 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 6 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 6 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 6 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 2 Iteration: 1200 / 4000 [ 30%] (Sampling)

#> Chain 2 Iteration: 1300 / 4000 [ 32%] (Sampling)

#> Chain 2 Iteration: 1400 / 4000 [ 35%] (Sampling)

#> Chain 2 Iteration: 1500 / 4000 [ 37%] (Sampling)

#> Chain 2 Iteration: 1600 / 4000 [ 40%] (Sampling)

#> Chain 2 Iteration: 1700 / 4000 [ 42%] (Sampling)

#> Chain 2 Iteration: 1800 / 4000 [ 45%] (Sampling)

#> Chain 2 Iteration: 1900 / 4000 [ 47%] (Sampling)

#> Chain 3 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 3 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 3 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 3 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 4 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 4 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 4 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 4 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 4 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 5 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 5 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 5 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 5 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 5 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 5 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 6 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 6 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 6 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 6 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 6 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 6 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 3 finished in 1.7 seconds.

#> Chain 2 Iteration: 2000 / 4000 [ 50%] (Sampling)

#> Chain 2 Iteration: 2100 / 4000 [ 52%] (Sampling)

#> Chain 2 Iteration: 2200 / 4000 [ 55%] (Sampling)

#> Chain 2 Iteration: 2300 / 4000 [ 57%] (Sampling)

#> Chain 2 Iteration: 2400 / 4000 [ 60%] (Sampling)

#> Chain 2 Iteration: 2500 / 4000 [ 62%] (Sampling)

#> Chain 2 Iteration: 2600 / 4000 [ 65%] (Sampling)

#> Chain 2 Iteration: 2700 / 4000 [ 67%] (Sampling)

#> Chain 4 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 4 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 4 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 4 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 4 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 4 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 5 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 5 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 6 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 5 finished in 1.7 seconds.

#> Chain 6 finished in 1.6 seconds.

#> Chain 2 Iteration: 2800 / 4000 [ 70%] (Sampling)

#> Chain 2 Iteration: 2900 / 4000 [ 72%] (Sampling)

#> Chain 2 Iteration: 3000 / 4000 [ 75%] (Sampling)

#> Chain 2 Iteration: 3100 / 4000 [ 77%] (Sampling)

#> Chain 2 Iteration: 3200 / 4000 [ 80%] (Sampling)

#> Chain 2 Iteration: 3300 / 4000 [ 82%] (Sampling)

#> Chain 2 Iteration: 3400 / 4000 [ 85%] (Sampling)

#> Chain 4 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 4 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 4 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 4 finished in 1.8 seconds.

#> Chain 2 Iteration: 3500 / 4000 [ 87%] (Sampling)

#> Chain 2 Iteration: 3600 / 4000 [ 90%] (Sampling)

#> Chain 2 Iteration: 3700 / 4000 [ 92%] (Sampling)

#> Chain 2 Iteration: 3800 / 4000 [ 95%] (Sampling)

#> Chain 2 Iteration: 3900 / 4000 [ 97%] (Sampling)

#> Chain 2 Iteration: 4000 / 4000 [100%] (Sampling)

#> Chain 2 finished in 2.0 seconds.

#>

#> All 6 chains finished successfully.

#> Mean chain execution time: 1.7 seconds.

#> Total execution time: 2.2 seconds.Y sale prácticamente lo mismo

summary(mbrm_alt)

#> Family: poisson

#> Links: mu = identity

#> Formula: y ~ b0 + b1 * (t - change) * step(change - t) + b2 * (t - change) * step(t - change)

#> b0 ~ 1

#> b1 ~ 1

#> b2 ~ 1

#> change ~ 1

#> Data: df (Number of observations: 23)

#> Draws: 6 chains, each with iter = 4000; warmup = 500; thin = 1;

#> total post-warmup draws = 21000

#>

#> Population-Level Effects:

#> Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

#> b0_Intercept 63.38 2.92 57.00 68.66 1.00 7444 7067

#> b1_Intercept 0.50 0.68 -0.71 1.92 1.00 6217 7902

#> b2_Intercept -1.05 0.42 -1.90 -0.27 1.00 8058 8853

#> change_Intercept 2007.95 2.88 2002.84 2014.69 1.00 7429 6453

#>

#> Draws were sampled using sample(hmc). For each parameter, Bulk_ESS

#> and Tail_ESS are effective sample size measures, and Rhat is the potential

#> scale reduction factor on split chains (at convergence, Rhat = 1).Me queda pendiente hacerlo con Turing.jl Saludos